This is the second part of our article about Blockchain consortiums focusing on the enterprise market. Part I of this article can be found here.

In Part I, we covered the Linux Foundation’s Hyperledger Project, which acts as an umbrella for Fabric, Intel Sawtooth Lake, and Iroha. We also covered R3’s Corda and Digital Asset Holdings’ offerings. In this part, we will cover the following three players:

- Chain

- Ripple

- Enterprise Ethereum

Chain

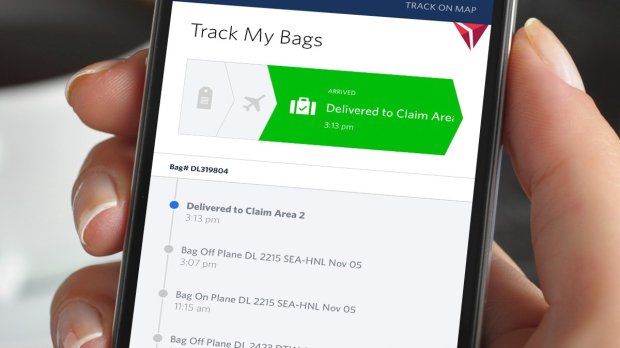

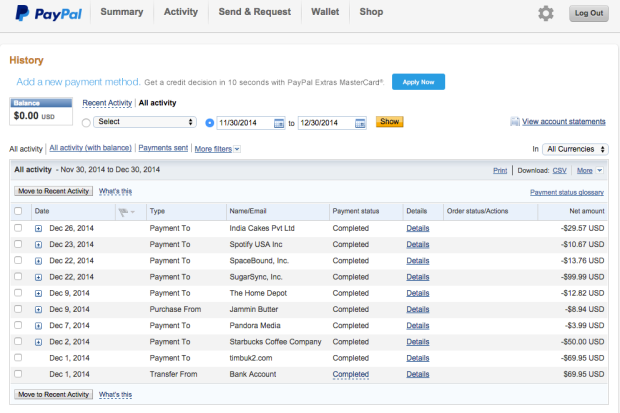

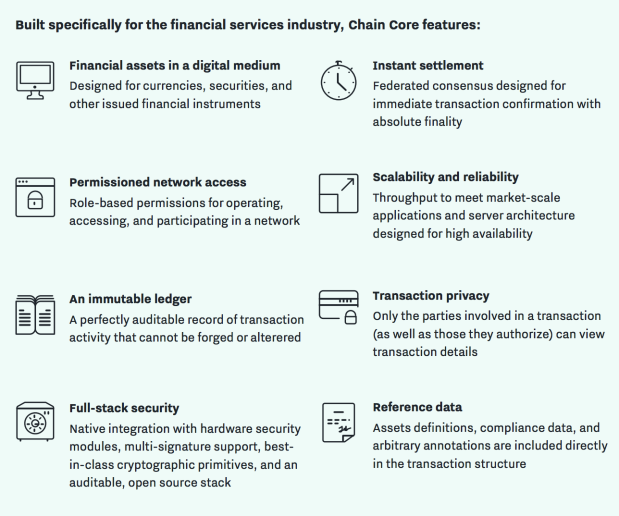

Chain is a Silicon Valley startup that provides Enterprise Blockchain solutions. Chain offers an enterprise-grade platform called Chain Core that allows companies to build and launch their own permissioned blockchains. Chain Core is built on the Chain Protocol, which defines how assets are issued, transferred, and controlled on a blockchain network. The protocol allows a single entity or a group of organizations to operate a network, supports the coexistence of multiple types of assets, and is interoperable with other independent networks.
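To make "issued, transferred, and controlled" more concrete, here is a minimal sketch of a multi-asset ledger. It is a toy in-memory model, not the Chain Core API: the class `ToyAssetLedger`, its method names, and the rule that a plain string stands in for a cryptographic signature are all assumptions made for illustration.

```python
from collections import defaultdict


class ToyAssetLedger:
    """Hypothetical in-memory ledger; not the Chain Core API."""

    def __init__(self):
        # asset_id -> the only party allowed to issue that asset
        self.issuers = {}
        # (account, asset_id) -> balance; several asset types coexist
        self.balances = defaultdict(int)

    def define_asset(self, asset_id, issuer):
        if asset_id in self.issuers:
            raise ValueError(f"asset {asset_id!r} is already defined")
        self.issuers[asset_id] = issuer

    def issue(self, asset_id, signer, to_account, amount):
        # Control rule: only the registered issuer may create new units.
        if asset_id not in self.issuers or self.issuers[asset_id] != signer:
            raise PermissionError("signer is not the issuer of this asset")
        if amount <= 0:
            raise ValueError("amount must be positive")
        self.balances[(to_account, asset_id)] += amount

    def transfer(self, asset_id, signer, to_account, amount):
        # Control rule: the signer can only spend from its own account.
        if amount <= 0 or self.balances[(signer, asset_id)] < amount:
            raise ValueError("invalid amount or insufficient balance")
        self.balances[(signer, asset_id)] -= amount
        self.balances[(to_account, asset_id)] += amount


ledger = ToyAssetLedger()
ledger.define_asset("USD", issuer="bank")
ledger.define_asset("loyalty-points", issuer="retailer")
ledger.issue("USD", signer="bank", to_account="alice", amount=100)
ledger.issue("loyalty-points", signer="retailer", to_account="alice", amount=500)
ledger.transfer("USD", signer="alice", to_account="bob", amount=40)
print(ledger.balances[("alice", "USD")], ledger.balances[("bob", "USD")])  # 60 40
```

A real network would replace the string comparison with signature checks and would record every operation as a transaction in a block rather than mutating balances in place.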

The Chain Core SDK is available in three languages (Java, Ruby, and Node.js) and provides a robust set of functionality for creating enterprise applications that require a permissioned Blockchain. Chain has put a significant amount of effort into building and documenting the APIs. By abstracting away the technical details that can make distributed ledger technology (DLT) hard to learn, Chain has done a great job of providing simplified libraries that will have an enterprise developer up and running in a minimal amount of time.

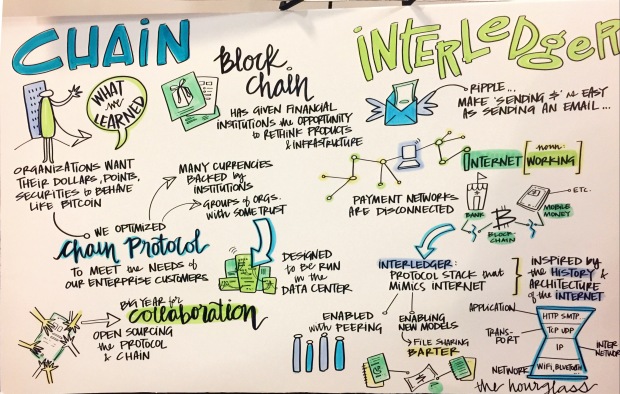

Image Credit: Chain.com

Chain could have stopped at just releasing the developer documentation – but they went an extra step and open sourced their Chain protocol as well. This allows third party developers to inspect and view the protocol specifications and build additional adapters and bridges to other popular blockchain networks and solutions in the market.

Chain has demonstrated its success by building a fully permissioned Blockchain-based solution for Visa called B2B Connect, which gives financial institutions a simple, fast and secure way to process business-to-business payments globally. Chain has also attracted investment from companies including NASDAQ, Fiserv, Capital One, Citi, Fidelity, First Data, and Orange. With its developer- and enterprise-friendly platform, Chain provides an easy entry point to DLT for enterprises that are looking to experiment with Blockchains.

The foundation of the Chain platform includes the Chain Virtual Machine (CVM), which executes smart contract programs written in Java, Ruby or Node. These high-level-language programs allow business logic to be executed on chain using the CVM instruction set. The programs are Turing complete; to guarantee that a contract does not get stuck in an infinite loop, the CVM terminates contract code that exceeds its run limit (similar to the ‘gas’ that powers Ethereum smart contracts).
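The run-limit idea is easy to see in a toy interpreter. The sketch below is not the CVM and does not use its instruction set; the three opcodes and the rule that every instruction costs one step are assumptions chosen to keep the example short. It only shows how a step budget lets a virtual machine stop a program that would otherwise loop forever.

```python
class RunLimitExceeded(Exception):
    """Raised when a program uses up its step budget."""


def run(program, run_limit):
    """Execute a list of (opcode, argument) pairs on a tiny stack machine."""
    stack = []
    pc = 0      # index of the next instruction
    steps = 0   # instructions executed so far
    while pc < len(program):
        if steps >= run_limit:
            raise RunLimitExceeded(f"stopped after {steps} steps")
        steps += 1
        op, arg = program[pc]
        if op == "PUSH":
            stack.append(arg)
            pc += 1
        elif op == "ADD":
            b, a = stack.pop(), stack.pop()
            stack.append(a + b)
            pc += 1
        elif op == "JUMP":
            pc = arg  # unconditional jump makes infinite loops possible
        else:
            raise ValueError(f"unknown opcode {op!r}")
    return stack


terminating = [("PUSH", 2), ("PUSH", 3), ("ADD", None)]
looping = [("PUSH", 1), ("JUMP", 0)]  # jumps back to the start forever

print(run(terminating, run_limit=10))  # [5]
try:
    run(looping, run_limit=1000)
except RunLimitExceeded as exc:
    print("terminated:", exc)
```

Production virtual machines typically charge different costs for different instructions; a flat cost of one step per instruction is used here only for simplicity.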

Image Credit: Construct 2017, Coindesk

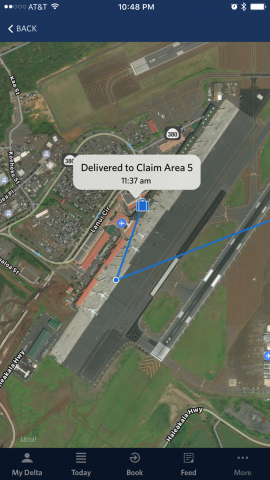

Ripple/Interledger:

Ripple is one of the first Distributed Ledger technology companies to have successfully integrated with financial institutions around the world to speed up cross-border payments. Sending money from one country to another can be a frustrating experience even by today’s standards. Almost all banks in the world today use SWIFT to move money across borders.

An average SWIFT payment takes days, not hours, to settle. A bank-to-bank transfer usually takes 1-2 days (taking time differences into consideration, if applicable). Depending on the size of the bank and its presence in the receiving country, a correspondent bank may also be involved.

Ripple attempts to eradicate this delay by providing near real-time settlement times for cross-border money movement. By their own statement:

Ripple’s solution is built around an open, neutral protocol (Interledger Protocol or ILP) to power payments across different ledgers and networks globally. It offers a cryptographically secure end-to-end payment flow with transaction immutability and information redundancy. Architected to fit within a bank’s existing infrastructure, Ripple is designed to comply with risk, privacy and compliance requirements.

Interledger Protocol (ILP) – ILP serves as the backbone of Ripple technology. ILP borrows heavily from battle-tested ideas in existing Internet standards (RFC 1122, RFC 1123 and RFC 1009). It is an open suite of protocols for connecting ledgers of different types, from digital wallets to national payment systems and other blockchains. A detailed overview of ILP can be found in a white paper here.
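One way to picture a payment crossing two independent ledgers is with hash-locked conditional transfers relayed by a connector that holds an account on each ledger. The sketch below is a simplified illustration under that assumption, not Ripple's implementation: `EscrowLedger`, the account names, and the amounts (which imply a made-up exchange rate and connector fee) are all hypothetical, and timeouts and rollback of expired transfers are left out.

```python
import hashlib
import os


class EscrowLedger:
    """Hypothetical ledger that can hold funds until a hash condition is met."""

    def __init__(self, balances):
        self.balances = dict(balances)
        self.held = {}  # condition -> (receiver, amount)

    def prepare(self, sender, receiver, amount, condition):
        # Lock the sender's funds; nobody can spend them until fulfillment.
        if self.balances.get(sender, 0) < amount:
            raise ValueError("insufficient funds")
        if condition in self.held:
            raise ValueError("condition already in use")
        self.balances[sender] -= amount
        self.held[condition] = (receiver, amount)

    def fulfill(self, fulfillment):
        # Release held funds only if the preimage hashes to the condition.
        condition = hashlib.sha256(fulfillment).digest()
        if condition not in self.held:
            raise ValueError("no transfer is waiting on this fulfillment")
        receiver, amount = self.held.pop(condition)
        self.balances[receiver] = self.balances.get(receiver, 0) + amount


usd_ledger = EscrowLedger({"alice": 100, "connector": 0})
eur_ledger = EscrowLedger({"connector": 100, "bob": 0})

secret = os.urandom(32)                     # known only to the receiver, bob
condition = hashlib.sha256(secret).digest()

# 1. Both legs are prepared with the same condition; no money has moved yet.
usd_ledger.prepare("alice", "connector", 11, condition)
eur_ledger.prepare("connector", "bob", 10, condition)

# 2. Bob reveals the secret to collect on the EUR ledger ...
eur_ledger.fulfill(secret)
# 3. ... and the connector reuses the same secret to collect on the USD ledger.
usd_ledger.fulfill(secret)

print(usd_ledger.balances, eur_ledger.balances)
```

Because both legs are locked to the same condition, the connector can only be paid if the receiver has been paid, and neither ledger needs to see the other's contents.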

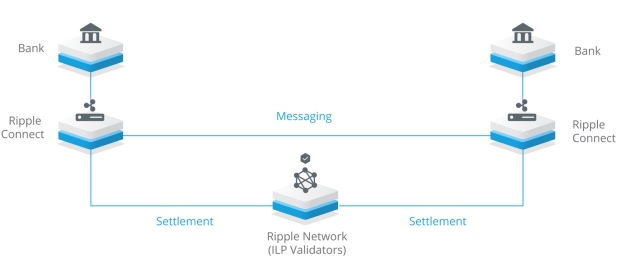

Ripple Connect – Ripple Connect acts as the glue that connects the various ledgers operated by financial institution (FI) clients around the world. It links the FIs’ ledgers through ILP for real-time settlement of cross-border payments while preserving each institution’s ledger and transaction privacy. It also lets banks exchange originator and beneficiary information, fees, and the estimated delivery time of a payment before it is initiated, thus providing transactional visibility.

Image Credit: ripple.com


Ripple has also built a sample ILP client for developers, along with supporting documentation that shows how to use ILP. A good overview of Interledger development documentation can be found here. Complete documentation about developing for the Ripple platform can be found here.

Ripple has shown that DLT can be used to solve the real-world problem of cross-border payments. It is one of the few companies that have acquired a BitLicense, a virtual currency license from the New York State Department of Financial Services.

Here is a list of Ripple’s financial institution clients that are either using it in production or testing the technology for cross-border money movement:

Image Credit: Ripple.com

Enterprise Ethereum:

Enterprise Ethereum is technically the new kid on the Enterprise Blockchain Consortium Block; however, Ethereum as a platform has a robust base of credentials to bring a fight to the ring. Enterprises have shown significant interest in Ethereum as a platform and many innovation labs have been testing a private deployment of Ethereum to understand the technology as well as the use cases it can solve.

Jeremy Millar from ConsenSys elegantly presents the merits of using Ethereum in an Enterprise setting:

Ethereum is arguably, the most commonly used blockchain technology for enterprise development today. With more than 20,000 developers globally, the benefits of a public chain holding roughly $1bn of value, and an emerging open source ecosystem of development tools, it is little wonder that Accenture observed ‘every self-respecting innovation lab is running and experimenting with Ethereum’. Cloud vendors are also supporting Ethereum as a first class citizen: Alibaba Cloud, Microsoft Azure, RedHat OpenShift, Pivotal CloudFoundry all feature Ethereum as one of their, if not the primary blockchain offering.

Enterprise Ethereum (EE) brings forth a very crucial difference between the technology used by players in this space – Blockchain vs. Distributed Ledger. While these two technologies have been interchangeably used by many in the field, there is a subtle difference that catches folks off-guard. Antony Lewis of R3 says:

All blockchains are distributed ledgers, but not all distributed ledgers are blockchains!
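One common way to draw that line is by data structure: a blockchain batches ledger entries into blocks, and each block carries the hash of the block before it, while a distributed ledger in general need not be organized this way. The sketch below illustrates only that hash linking. The function names and the JSON-based block format are assumptions for illustration and do not come from Ethereum or any other platform discussed here.

```python
import hashlib
import json


def block_hash(block):
    """Hash a block's full contents, including its link to the previous block."""
    payload = json.dumps(block, sort_keys=True).encode()
    return hashlib.sha256(payload).hexdigest()


def append_block(chain, transactions):
    """Add a block that commits to the hash of the current last block."""
    prev_hash = block_hash(chain[-1]) if chain else "0" * 64
    chain.append({"prev_hash": prev_hash, "transactions": transactions})


def is_valid(chain):
    """Check that every block still points at the hash of its predecessor."""
    return all(
        chain[i]["prev_hash"] == block_hash(chain[i - 1])
        for i in range(1, len(chain))
    )


chain = []
append_block(chain, ["alice pays bob 5"])
append_block(chain, ["bob pays carol 2"])
append_block(chain, ["carol pays dave 1"])
print(is_valid(chain))  # True

# Rewriting history in an early block breaks the link held by the next block.
chain[0]["transactions"] = ["alice pays bob 500"]
print(is_valid(chain))  # False
```

This check covers every block except the most recent one, which has no successor yet; real networks rely on consensus among participants to agree on the latest block.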

Enterprise Ethereum attempts to bring the technology behind Ethereum into enterprises, while the other players have redesigned the idea behind blockchains to fit enterprise needs. It is still too early to tell how Enterprise Ethereum will evolve, as not many details have been published beyond the launch-day webcast (7 hours). Around the first hour, Vitalik Buterin talks about the Ethereum roadmap.

We also see alliance partners on various panels talking about what Ethereum in the enterprise means to them and why they are part of it. EE has launched with a significant number of important players in the space, most notably JP Morgan, which has also announced the launch of its Ethereum-based blockchain called Quorum.

Image Credit: http://entethalliance.org/

Conclusion:

The Enterprise Blockchain/Distributed Ledger space is becoming competitive, with a good number of offerings. There are other players, like Multichain and Monax, that we have not covered here but that deserve a close watch. Just as relational databases changed the way enterprise applications are built, blockchains and distributed ledgers will shape how the next generation of enterprise applications is designed and built.

2007 was a watershed moment in technology. The iPhone redefined the concept of personal computing, and the world as we knew it was never the same. In just a decade, we have seen the proliferation of smart devices in every aspect of our lives. We take for granted the various conveniences offered by our smartphones.
